In a world increasingly reliant on artificial intelligence, the pursuit of smarter, more capable reasoning models stands at the forefront of technological advancement. OpenAI, a frontrunner in AI research, has recently unveiled its latest reasoning model, designed to improve how machines interpret and process information. Yet amid the excitement, one issue deserves close examination: the basic errors that can still emerge from even the most sophisticated algorithms. This article examines OpenAI’s recent developments, spotlighting the challenges and missteps that accompany the quest for near-perfect reasoning. As we explore the capabilities and limitations of these models, we invite readers to consider what they mean for the future of AI and its role in our daily lives.

Table of Contents

- Understanding the Mechanisms Behind Common Missteps in Reasoning Models

- Analyzing Case Studies of OpenAI’s Recent Model Errors

- Strategies for Enhancing Accuracy in AI Reasoning Capabilities

- Recommendations for Developers: Navigating Limitations in AI Interpretation

- Q&A

- Concluding Remarks

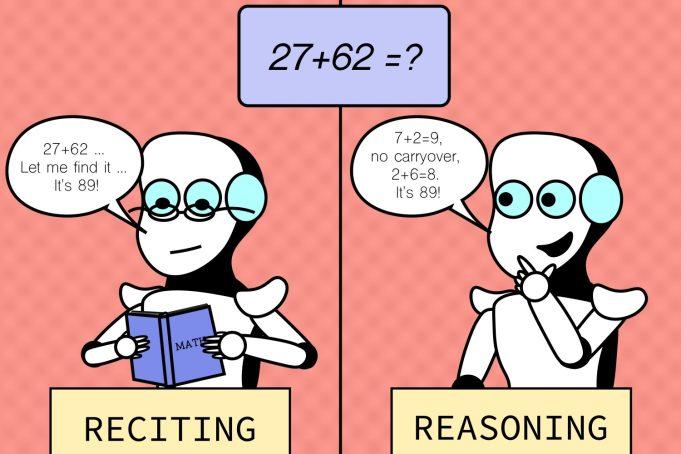

Understanding the Mechanisms Behind Common Missteps in Reasoning Models

The intricacies of reasoning models can often lead to pitfalls that compromise their effectiveness. One of the primary issues arises from the overreliance on patterns that can generate misleading conclusions. These models typically analyze data inputs to identify correlations, but in some cases, they misinterpret these relationships, attributing causation where there is none. For instance, a model might observe that increases in ice cream sales coincide with a rise in drowning incidents, leading to a faulty conclusion that eating ice cream causes drowning, rather than recognizing that both are influenced by warmer weather. This highlights the necessity for a deeper contextual understanding in data interpretation.

Furthermore, the failure to accommodate anomalies within the dataset can result in skewed outcomes. Models often operate under the assumption that the data follows a certain distribution, which may not always hold true, especially with real-world data laden with outliers or exceptions. When a reasoning model encounters data points that diverge significantly from established trends, its responses can become erratic. Recognizing and addressing these anomalies is critical for improving model robustness. Below is a concise table illustrating some common missteps in reasoning models along with their potential impacts:

| Common Misstep | Potential Impact |
|---|---|
| Overgeneralization | Leads to false conclusions based on limited data. |
| Ignoring Contextual Factors | Produces skewed results that fail to reflect real-world complexities. |
| Misidentifying Correlation as Causation | Can result in misguided strategies based on faulty assumptions. |
| Inadequate Handling of Outliers | Causes unforeseen variability and undermines predictive accuracy. |
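One common way to keep a few anomalies from distorting a summary is to measure spread with the median absolute deviation (MAD) instead of the standard deviation. The sketch below uses the modified z-score, 0.6745 × |x − median| / MAD, with the conventional cutoff of 3.5; the sensor-style readings are made up for the example.

```python
from statistics import median


def flag_outliers(values, threshold=3.5):
    """Return indices whose modified z-score exceeds `threshold`.

    The median and MAD are robust: a few extreme points cannot drag them
    around the way they drag the mean and standard deviation.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # At least half the values equal the median, so MAD gives no scale.
        return []
    return [i for i, v in enumerate(values)
            if 0.6745 * abs(v - med) / mad > threshold]


readings = [10.1, 9.8, 10.3, 10.0, 9.9, 10.2, 48.0]
print(flag_outliers(readings))  # → [6]
```

A plain "|z| > 3" rule would miss the anomaly here: in a sample of seven values, no single point can reach a z-score of 3, however extreme it is, because the outlier inflates the very standard deviation it is measured against.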

Analyzing Case Studies of OpenAI’s Recent Model Errors

Examining the recent performance of OpenAI’s reasoning model reveals a pattern of basic errors that can significantly affect its outputs. Notable instances include:

- Misinterpretation of Questions: The model has occasionally provided answers that diverge from the context of the questions posed, indicating flaws in comprehension.

- Logical Fallacies: Instances of circular reasoning and false dichotomies have been observed in responses, undermining the credibility of the conclusions drawn.

- Inconsistent Output: The same query can yield varying results, reflecting a lack of stability in reasoning processes.
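Inconsistent output is easy to measure before it reaches users: send the same query several times and count how often the answers agree. The sketch below assumes a hypothetical `ask` callable that wraps whatever model client you use; `fake_model` is a made-up stand-in that cycles through canned replies so the example runs offline. Answers are compared as exact strings after trimming whitespace, which is a simplifying assumption; real free-text answers usually need normalizing first.

```python
import itertools
from collections import Counter
from typing import Callable


def consistency_report(ask: Callable[[str], str], query: str, runs: int = 10) -> dict:
    """Send the same query `runs` times and summarize how often the answers agree."""
    answers = [ask(query).strip() for _ in range(runs)]
    counts = Counter(answers)
    modal_answer, modal_count = counts.most_common(1)[0]
    return {
        "modal_answer": modal_answer,
        "agreement": modal_count / runs,  # 1.0 means every run matched
        "distinct_answers": len(counts),
    }


# Hypothetical stand-in for a real model client: it cycles through canned
# answers so the example is deterministic.
_canned = itertools.cycle(["42", "42", "41", "42", "42"])


def fake_model(prompt: str) -> str:
    return next(_canned)


report = consistency_report(fake_model, "What is 6 * 7?", runs=10)
print(report)  # → {'modal_answer': '42', 'agreement': 0.8, 'distinct_answers': 2}
```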

These errors raise questions about the training data and methods used to build the model. The following table summarizes representative examples:

| Error Type | Example | Impact |
|---|---|---|
| Misinterpretation | A question about solar energy policy answered with historical data instead of current information | Muddles the current discussion and can spread misinformation |
| Logical Fallacy | Reasoning “A leads to B, therefore B implies A” | Undermines the validity of the argument |
| Inconsistency | Giving two different values for the same math question | Creates confusion and erodes trust in the model |
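The logical-fallacy row describes inferring a conditional’s converse, a mistake closely related to the fallacy of affirming the consequent. A four-row truth table is enough to show why the inference fails; this small Python check enumerates every assignment, finds the case where “A implies B” is true but “B implies A” is false, and confirms that valid modus ponens has no such counterexample.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material implication: p -> q is false only when p is true and q is false."""
    return (not p) or q


# Assignments where "A implies B" holds but its converse "B implies A" fails.
counterexamples = [
    (a, b) for a, b in product([False, True], repeat=2)
    if implies(a, b) and not implies(b, a)
]
print(counterexamples)  # → [(False, True)]

# Modus ponens (A, and A -> B, therefore B): B holds in every row where both premises do.
modus_ponens_valid = all(b for a, b in product([False, True], repeat=2)
                         if a and implies(a, b))
print(modus_ponens_valid)  # → True
```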

Strategies for Enhancing Accuracy in AI Reasoning Capabilities

Enhancing the accuracy of AI reasoning involves a multi-faceted approach that refines both the training process and the end-user experience. One key strategy is to build robust feedback loops, in which AI systems are exposed to real-world data and to corrections from users. These corrections help tune the model and also draw users into the iterative process, fostering a shared approach to problem-solving. In addition, contextual awareness can be reinforced by integrating knowledge graphs that link related facts, allowing the system to reason with a broader perspective and make better-informed decisions.
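As a rough illustration of how a knowledge graph interlinks data points, the sketch below stores a few hypothetical facts as (subject, relation, object) triples and uses breadth-first search to return the chain of facts connecting two entities. The facts and function names are invented for the example; production systems use dedicated graph stores and far richer schemas. Returning the chain, rather than a bare yes or no, gives a reasoning system explicit context it can cite.

```python
from collections import deque

# Hypothetical facts stored as (subject, relation, object) triples.
TRIPLES = [
    ("hot weather", "increases", "ice cream sales"),
    ("hot weather", "increases", "swimming"),
    ("swimming", "increases", "drowning risk"),
]


def build_graph(triples):
    """Index triples by subject so outgoing facts can be looked up quickly."""
    graph = {}
    for subj, rel, obj in triples:
        graph.setdefault(subj, []).append((rel, obj))
    return graph


def find_path(graph, start, goal):
    """Breadth-first search for the shortest chain of facts from start to goal."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for rel, nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [(node, rel, nxt)]))
    return None  # no chain of stated facts connects the two entities


graph = build_graph(TRIPLES)
print(find_path(graph, "hot weather", "drowning risk"))
print(find_path(graph, "ice cream sales", "drowning risk"))  # → None
```

Because edges are followed only in their stated direction, the search finds a route from hot weather to drowning risk but none from ice cream sales, echoing the ice cream example earlier in the article.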

Another important strategy is the use of ensemble methods, in which multiple models with diverse architectures are combined to reach a consensus output. Pooling their answers can offset the mistakes of any single model, improving accuracy as long as the models do not all make the same errors. Transparency in reasoning matters as well: systems that let users trace the logic behind an AI decision empower those users and can reveal areas for improvement. The following table summarizes these strategies:

| Strategy | Description |
|---|---|
| Robust Feedback Loops | Incorporating user feedback to improve model performance. |
| Contextual Awareness | Using knowledge graphs to enhance information interlinking. |
| Ensemble Methods | Combining multiple models for improved reliability. |
| Transparent Reasoning | Allowing users to understand AI decision-making processes. |
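A simple way to combine ensemble members is majority voting. The minimal sketch below assumes each model returns a short, directly comparable answer; the `min_share` parameter and the choice to return `None` when no answer has a clear majority are illustrative design decisions, not a standard API.

```python
from collections import Counter


def majority_vote(answers, min_share=0.5):
    """Return the most common answer if it wins more than `min_share` of the votes.

    Returns None when there is no clear majority, so the caller can fall back
    to something safer (for example, human review) instead of forcing a guess.
    """
    if not answers:
        return None
    top, votes = Counter(answers).most_common(1)[0]
    return top if votes / len(answers) > min_share else None


# Hypothetical outputs from three independently built models.
print(majority_vote(["Paris", "Paris", "Lyon"]))      # → Paris
print(majority_vote(["Paris", "Lyon", "Marseille"]))  # → None
```

Voting only helps when the models err in different ways; three copies of the same model tend to share the same mistakes, so their agreement says little.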

Recommendations for Developers: Navigating Limitations in AI Interpretation

In light of the recent insights into OpenAI’s reasoning model, it’s essential for developers to approach AI interpretation with a discerning eye. Understanding the limitations of these models can prevent misapplications and enhance the efficacy of AI systems. Here are some strategic recommendations to consider:

- Foster a culture of skepticism: Encourage team members to critically evaluate AI outputs rather than accepting them at face value.

- Integrate human oversight: Utilize teams of domain experts to review and validate AI interpretations, particularly in high-stakes environments.

- Embrace iterative testing: Implement continuous testing cycles to identify common errors and areas for improvement in AI reasoning.

- Invest in user education: Ensure that end-users are aware of possible limitations and understand how to interpret AI-generated content effectively.
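Iterative testing can start as a small regression suite: a fixed set of prompts with known answers, re-run after every model, prompt, or configuration change. In this sketch, `Case`, `run_suite`, and the stub model are hypothetical names; the stub deliberately gets the primality question wrong (91 = 7 × 13) so the failure report has something to show.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Case:
    prompt: str
    expected: str


def run_suite(ask: Callable[[str], str], cases: list[Case]):
    """Run every case; return (accuracy, list of (case, answer received) for failures)."""
    if not cases:
        raise ValueError("the suite needs at least one case")
    failures = []
    for case in cases:
        answer = ask(case.prompt).strip()
        if answer != case.expected:
            failures.append((case, answer))
    accuracy = 1 - len(failures) / len(cases)
    return accuracy, failures


CASES = [
    Case("What is 17 + 25?", "42"),
    Case("Is 91 a prime number? Answer yes or no.", "no"),
    Case("If all bloops are razzies and all razzies are lazzies, "
         "are all bloops lazzies? Answer yes or no.", "yes"),
]

# Hypothetical model stub: correct everywhere except the primality question.
STUB_ANSWERS = {c.prompt: c.expected for c in CASES}
STUB_ANSWERS["Is 91 a prime number? Answer yes or no."] = "yes"

accuracy, failures = run_suite(lambda p: STUB_ANSWERS[p], CASES)
print(f"accuracy: {accuracy:.2f}")  # → accuracy: 0.67
for case, got in failures:
    print(f"FAIL: {case.prompt!r} expected {case.expected!r}, got {got!r}")
```

Tracking which cases fail, not just the overall accuracy, is what makes a suite like this useful for spotting recurring error patterns.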

Moreover, developers can benefit from establishing robust debugging frameworks that specifically target AI reasoning flaws. By creating clear documentation and feedback loops, developers can refine the model’s performance and make informed adjustments. Consider the following framework as a starting point:

| Challenge | Proposed Solution |
|---|---|
| Misinterpretation of data | Use annotated datasets for training |
| Lack of context | Incorporate contextual embeddings |
| Overgeneralization | Employ fine-tuning techniques |

Q&A

Q&A: OpenAI’s Recent “Reasoning” Model and Its Basic Errors

Q1: What is OpenAI’s newest reasoning model?

A1: OpenAI’s latest reasoning model is an advanced artificial intelligence system designed to improve the way AI systems understand and process complex information. This model focuses on tasks that require logical thinking, problem-solving, and a deeper comprehension of underlying concepts.

Q2: How does this model differ from previous iterations?

A2: Unlike earlier models that primarily leveraged pattern recognition, this new model incorporates more sophisticated techniques for logical reasoning and inference. It aims to bridge the gap between mere data processing and genuine understanding, enabling it to tackle intricate problems and generate more insightful responses.

Q3: What types of tasks is the model designed to perform?

A3: The model is particularly aimed at tasks such as mathematical reasoning, causal inference, and context-based decision-making. It’s intended for applications in various fields, from education to advanced scientific research, where complex reasoning is essential.

Q4: What are some of the basic errors that have been identified in this model?

A4: Despite its advancements, the model has exhibited a range of basic errors, including misinterpretations of context, incorrect applications of logical principles, and failures in sequential reasoning. These errors suggest that while the model can handle basic logic, it still struggles with nuanced or ambiguous information.

Q5: Can you provide an example of a basic error made by the model?

A5: One notable example involved the model incorrectly solving a mathematical word problem due to a misinterpretation of key terms. Instead of identifying the relevant variables accurately, it applied a sequence of operations that did not align with the problem’s requirements, leading to an erroneous conclusion.

Q6: How has OpenAI responded to these issues?

A6: OpenAI has acknowledged these basic errors and is actively working on improving the model through iterative updates. They are focusing on refining its training data and enhancing its algorithms to better equip the model to understand and navigate complex reasoning tasks.

Q7: What implications do these errors have for the future use of reasoning models?

A7: The presence of basic errors highlights the ongoing challenges in achieving true artificial intelligence that can reason like a human. It underscores the necessity for continuous learning and adaptation in AI systems. For users and developers, it serves as a reminder to critically evaluate AI outputs and maintain an understanding of their limitations.

Q8: What does the future hold for AI reasoning models?

A8: As research progresses, we can expect future models to become more proficient at understanding context and applying logic effectively. Improvement in multi-modal reasoning—integrating visual, textual, and numerical information—will likely enhance AI’s overall capabilities, leading to more reliable applications across various industries.

Q9: How can users best engage with this technology given its current limitations?

A9: Users are encouraged to approach the technology with a blend of optimism and caution. While it offers remarkable potential, critical thinking should accompany its use. Engaging with AI outputs skeptically and verifying the results can help mitigate any risks associated with its basic errors.

Q10: Where can readers learn more about OpenAI’s reasoning model and its developments?

A10: Readers can explore OpenAI’s official blog and research publications, where updates on advancements, technical reports, and user guidelines appear regularly. Engaging with community forums and discussions around AI can also provide valuable insights into real-world applications and challenges.

Concluding Remarks

OpenAI’s latest foray into the realm of reasoning models represents a significant leap forward in artificial intelligence. While the advancements are notable, the model’s occasional missteps highlight the complexities inherent in developing systems that aim to mimic human cognition. These basic errors serve as reminders that even the most sophisticated algorithms are still learning and evolving. As we continue to explore the potential of AI, it is crucial to approach these technological advancements with a blend of optimism and critical scrutiny. The journey towards truly intelligent systems is ongoing, and with each iteration, we edge closer to unlocking the full potential of artificial reasoning. Ultimately, understanding and addressing these errors can pave the way for a more nuanced and effective interaction between humans and machines, forging pathways for innovation that are as exciting as they are complex.
